Anaesthesia (from Greek, ‘loss of sensation’) refers to a temporary, drug-induced state in which sensory perception is diminished or completely eliminated. Several forms exist, ranging from the loss of sensation in a localised area of the body (regional anaesthesia) to the total loss of conscious awareness under general anaesthesia: effectively, a reversible coma.

General anaesthesia is undoubtedly one of the most profound feats of modern medicine. It allows even the most gruelling surgical procedures to be performed without the patient feeling the slightest pain or retaining any memory of the operation.

Yet although doctors have routinely used general anaesthesia for more than 150 years, exactly what happens inside our brains during this curious phenomenon remains a mystery.

Understanding this peculiar enigma could help reveal the true nature of consciousness itself, a concept that scientists and philosophers alike have grappled with for centuries.

A Brief History

Attempts to produce a state of unconsciousness equivalent to modern general anaesthesia can be traced deep into recorded history, appearing in ancient writings from great civilisations across the globe: the Sumerians, Babylonians, Romans, Egyptians and Chinese, to name a few.

Early anaesthetic drugs were herbal concoctions, usually made from extracts of the opium poppy, mandrake, jimsonweed and marijuana, along with alcohol. Such remedies did not, however, ‘turn out the lights’ of consciousness; they could only numb the pain and induce a somewhat sleepy state. Sometimes patients were even knocked unconscious with a targeted blow to the head. Faced with the intolerable pain of crude surgical procedures, some people would opt for certain death instead.

The Renaissance heralded a golden age of scientific progress. Revolutionary discoveries were made in human anatomy and physiology, from the detailed anatomical sketches of the legendary polymath Leonardo da Vinci (1452-1519) to the English physician William Harvey's (1578-1657) pioneering description of how the heart circulates blood around the body.

Despite this acceleration in anatomical knowledge, it was not until the 18th and 19th centuries that significant advances in general anaesthesia were made. For example, the English scientist Joseph Priestley isolated nitrous oxide (‘laughing gas’) in 1772. Towards the end of that century, the chemist Humphry Davy experimented with inhaling the gas and observed that it appeared “capable of destroying physical pain.”

Further discoveries in pharmacology, together with the careful development and refinement of surgical practice, have led to general anaesthesia as we know it today. Although a diverse range of anaesthetic drugs is now available, many still rely on substances with early roots, such as morphine (derived from the opium poppy) and nitrous oxide.

Looking back on how far general anaesthesia has come, we can be grateful to have been born in an age when surgery is no longer accompanied by tortured screams of intolerable pain.

Dimming the Lights

Consciousness is often thought of as ‘all or nothing’ – a state that can be activated by flipping on some metaphorical switch.

However, after anaesthetic drugs are administered, the patient drifts gradually into unconsciousness, making the process more akin to the slow dimming of lights. The journey can be divided into four distinct stages: ‘induction’ (the patient feels light-headed but can still hold a conversation); ‘excitement’ (a burst of energetic, delirious behaviour accompanied by irregular heart and breathing rates); ‘unconsciousness’ (the eyes roll back, pain reflexes are diminished, and heart and breathing rates become steady); and finally ‘overdose’, in which the patient has sunk into a coma so deep that they require both cardiovascular and respiratory support.

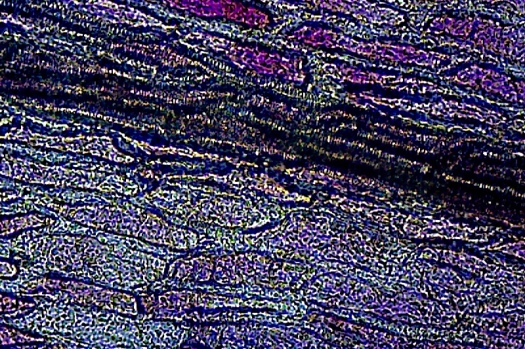

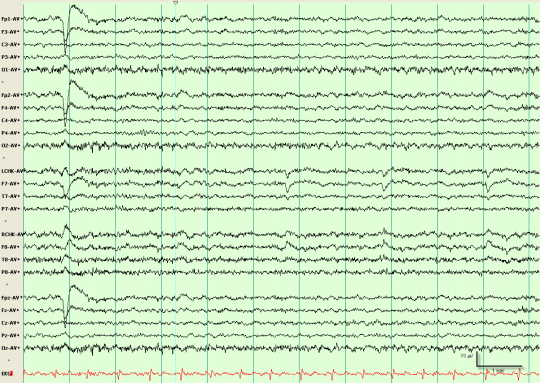

Anaesthetists can monitor brain activity using an electroencephalogram (EEG). This sophisticated tool allows neural activity to be measured as a patient passes from a conscious to an unconscious state under general anaesthesia.

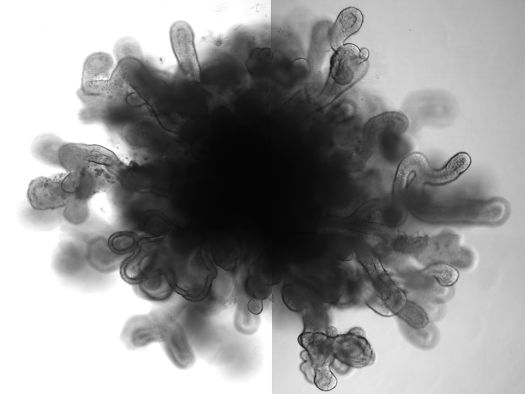

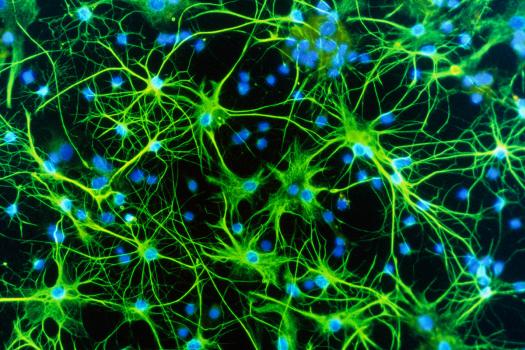

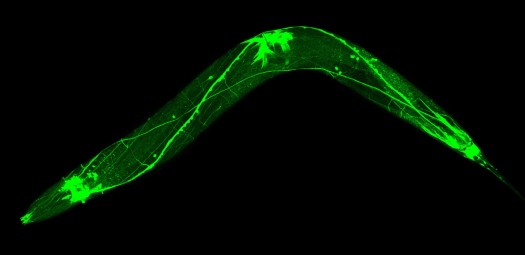

The galaxy of billions of neurones that make up the active brain generates electrical signals, and their combined activity can be detected by EEG electrodes placed on the scalp. These signals are transmitted to a computer, which displays them as oscillating patterns of peaks and troughs: brain waves.

As an anaesthetised patient drifts into unconsciousness, their normal brain wave pattern of high-frequency, low-intensity activity shifts to one of high-intensity bursts at lower frequencies. Curiously, these high-intensity bursts appear at regular intervals, as if brain processes are occurring in an organised fashion.

How does it work?

So general anaesthesia has transformed surgery from an agonising nightmare into a gentle slumber. Yet the mechanism underpinning this transformation remains largely a mystery. Perhaps this isn’t so surprising: if we can’t define consciousness, how can we begin to comprehend its disappearance?

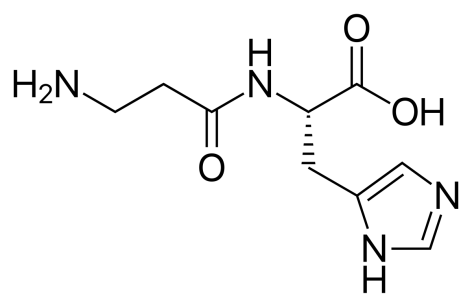

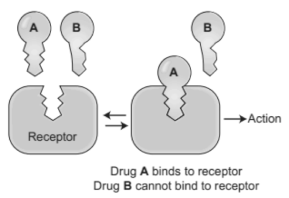

Many drugs work by binding to specific receptor proteins on or inside our cells, either triggering a response or actively preventing one. This is often pictured as a ‘lock and key’ mechanism: the drug molecule is a carefully shaped key that fits snugly into the receptor ‘lock’.

However, to submerge a patient into such a deep abyss of mental and physical paralysis, anaesthetists rely not on one key but on an extensive collection. Anaesthetic agents range from bulky, complex molecules such as steroids to the inert gas xenon, which exists as single atoms. Surely such a diverse collection cannot all fit a single, universal lock?

For a long time, the general consensus was that, rather than acting through a ‘lock and key’ mechanism, anaesthetics work by physically disrupting the hydrophobic core of the phospholipid bilayer of brain cells, i.e. the ‘fatty’ interior of their cell membranes. This idea became known as the ‘lipid theory’ and was rooted in the observation that the potency of anaesthetics correlates closely with their lipophilicity (their ability to dissolve in fats and oils).

In the 1980s, however, doubt was cast on the lipid theory when simple test-tube experiments showed that anaesthetics could bind to and inhibit a protein (the firefly enzyme luciferase) in the complete absence of cell membranes, suggesting that membrane lipids may have little to do with general anaesthesia.

Further weakening the lipid hypothesis, scientists pointed out that small deviations from normal body temperature can disturb cell membranes to a comparable degree, yet do not induce a state of deep unconsciousness.

The correlation between an anaesthetic’s lipophilicity and its potency is now thought to stem, at least in part, from the greater ease with which lipophilic molecules cross the blood-brain barrier to exert their effects on neurones inside the brain.

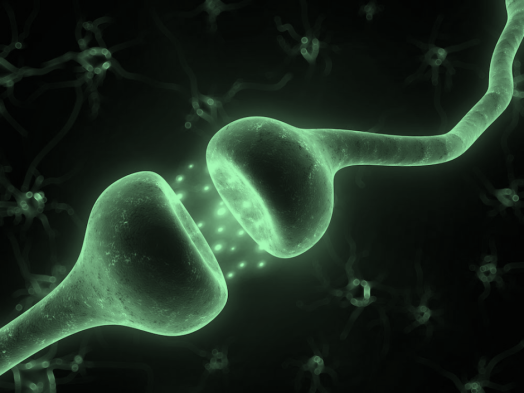

More recent studies have shown that general anaesthetics may interact directly with hydrophobic sites on certain membrane-embedded proteins throughout the central nervous system (CNS). On binding, the drug causes a structural change in the protein. And since a protein’s shape is intimately linked to its function, this makes communication between nerve cells across the CNS (i.e. the transmission of signals across synapses) more difficult.

This hypothesis supports the idea that, rather than shutting down brain activity completely, anaesthetic drugs interfere with the brain’s internal communication. Perhaps, then, it is this internal communication that underpins consciousness?

Unfortunately, because it is so difficult to obtain structural information about hydrophobic membrane proteins, and therefore about how they interact with drug molecules, it remains unclear exactly how anaesthetics exert their effects at the molecular level. Further complicating matters, some studies suggest that inhaled anaesthetics do not act through specific (lock and key) binding at all; instead, they associate loosely with membrane proteins and disrupt the dynamic motions those proteins need in order to function.

A jigsaw of consciousness

One of the difficulties in studying consciousness is the lack of a universally accepted definition. After all, what is it like to see red? Or to taste chocolate? These questions seem to make sense, but delve deeper and you will realise there is no straightforward answer.

Perhaps a good place to start in defining consciousness is the statement “I think, therefore I am”. In essence, you cannot logically deny that your mind (your conscious awareness) exists, because you must use your mind to do the denying. Consciousness, in this sense, is the faculty that perceives that which exists. This deceptively simple proclamation comes from René Descartes (1596-1650), the mathematician often called the ‘Father of Modern Western Philosophy’.

However, the mechanism by which this perception of ‘that which exists’ arises, the biochemical ‘spark’ that ignites a kaleidoscope of colourful sensations, remains a mystery. This ancient conundrum has been dubbed the ‘hard problem’ of consciousness, a term coined by the philosopher David Chalmers in the 1990s.

Many scientists believe that by systematically charting the breakdown of consciousness during general anaesthesia, we may shed some light on the ‘hard problem’.

As we saw earlier, anaesthetics appear to act on multiple different receptor proteins, suppressing the firing of neurones throughout the brain. The resulting disruption of the brain’s internal communication seems to be a vital element in achieving general anaesthesia.

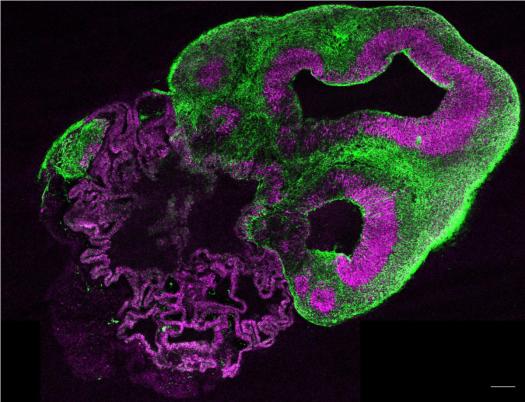

Based on this observation, it seems that consciousness is not rooted in a single discrete brain region or receptor; instead, it is a widely distributed phenomenon. EEG studies support this idea, indicating a breakdown in communication between the front and back of the brain during general anaesthesia.

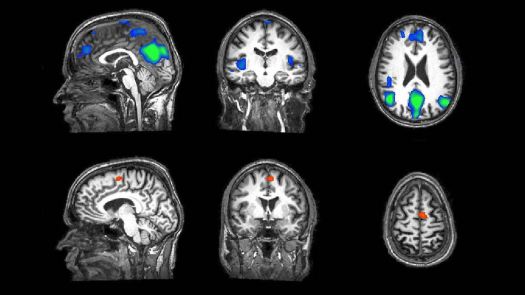

Another intriguing observation comes from functional magnetic resonance imaging (fMRI), a powerful brain imaging tool that measures neural activity indirectly by detecting changes in blood flow. When a particular area of the brain becomes more active, it needs more oxygen, so the flow of blood to that area increases.

fMRI studies have shown that in anaesthetised patients, small ‘islands’ of neural tissue still become active in response to external stimuli such as light or sound. Yet despite the brain detecting these stimuli, the patient remains unconscious: somehow, the sensory information is never processed and integrated into an overall awareness.

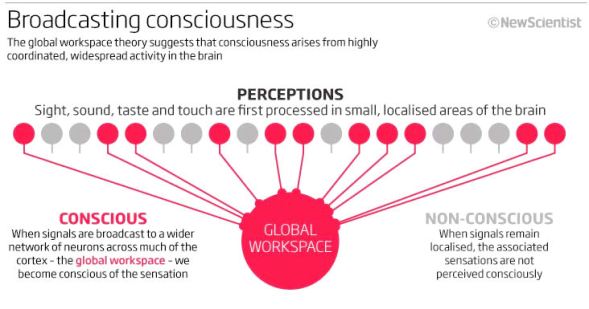

These findings fit well with the ‘Global Workspace Theory’ (GWT) of consciousness, a very basic, initial explanation for the hard problem. The theory proposes that sensory information is first processed unconsciously and locally, in individual brain regions. Each region then ‘broadcasts’ its signals to a network of branching neurones, which begin firing in synchrony throughout the brain.

Therefore, according to GWT, it is the complex interaction between discrete brain regions that integrates the signals generated by an external stimulus into an overall response and produces awareness of that stimulus: consciousness.

Charting and understanding the loss of consciousness during general anaesthesia may not only illuminate the nature of the conscious mind but also deepen our currently patchy understanding of dampened or altered states of consciousness, for example in people with depression or schizophrenia. Research in this field may also lead to improved techniques for detecting the brain activity, and thus the emotions or needs, of people who appear to be in a vegetative state but may retain some awareness: a conscious mind imprisoned in an unresponsive body.

It is clear, then, that research into the mechanism underlying general anaesthesia is a worthy endeavour, both for tackling the age-old, elusive ‘hard problem’ of consciousness and, at the opposite end of the spectrum, for developing treatments that directly improve people’s lives.

The art of anaesthesia is truly remarkable. Every day across the globe, millions of people are guided to the brink of nothingness and then led safely home again, without anyone really knowing how.